Many of us are blurring the lines between work and non-work. Working from home makes this difficult, but with all the benefits I would not want it any other way. Organisations, whilst being supportive, are also enabling us to use our own devices for work, and while this is often limited, across many companies the mobile phone seems to have been infiltrated.

Why is having work apps on your personal phone bad?

It’s not bad per se, but there are some privacy concerns, control concerns, attention concerns, etc. I am not going to cover these here, but generally the pros often outweigh the cons. For example, I personally really like being able to glance at my phone and see what meeting I have next.

Silencing notifications and setting active schedules can help with the distractions, but this does not solve the issue of having work data on your personal phone.

The rest of this post is a little technical, but it mostly comes down to installing an app. NO root or jailbreaking is required.

What is an Android Work Profile?

Before I talk about Shelter, I need to talk about Android work profiles.

For companies that have chosen to use Google’s suite of enterprise apps (Google Workspace), there is a way to manage company mobile phones and allow employees to install work apps on their personal mobile phones. It’s called Google Mobile Management (MDM solution | Google Workspace).

One of the core features is the separation of business apps from personal apps. Having worked for a company that did this, and as an Android user, I can say that this is the best implementation of app separation I have seen. It is implemented with a work profile. A work profile is a distinct area, managed by your organisation, in which separate copies of work apps can be installed. Because it is distinct from the usual area, it can be remotely wiped without impacting personal apps or functionality, copying data between the work and personal profiles can be controlled, and more.

Unfortunately, on some Android phones that use heavily modified versions of the Android OS, work profiles are sometimes removed. Additionally, I have yet to find any Android device where work profiles are available for users to set up without Google Workspace accounts and licences. This is where Shelter comes in.

Shelter

To quote the description from F-Droid, the main download site where you can get the APK (Shelter | F-Droid – Free and Open Source Android App Repository):

“Shelter is a Free and Open-Source (FOSS) app that leverages the “Work Profile” feature of Android to provide an isolated space that you can install or clone apps into.”

Shelter can either be installed and kept up to date using F-Droid (which requires installing the F-Droid client app), or you can simply download and install the APK using the link above. Once installed, there is a straightforward setup process to follow in the Shelter app, and you will soon be up and running with a work profile.

You can read more about Shelter at the links below:

- GitHub – achalmgucker/Shelter: A tool for Android to enable a separate work profile made by PeterCxy. See original code site.

- PeterCxy/Shelter: Isolate your Big Brother Apps, using Work Profiles – Angry.Im Software Forge

It is worth noting that this project was authored by a single developer, but the developer notes:

“The author still relies on Shelter for his daily life, so Shelter will not become abandonware in the forseeable future.”

Installing Apps in your Shelter work profile

By far the easiest way to do this is to install the app you need on your personal profile via the Google Play Store, without signing in to any work accounts, and then copy it from your personal profile to your work profile using the Shelter app. Afterwards, if you have no use for the non-work version, you can uninstall the personal profile copy of the app (note that you may occasionally need to repeat this process to update the apps).

Your work profile is available from the app drawer, so this is where you can open the work apps and log in with your work accounts. You always know when you are using a work profile app, as it has a small briefcase badge on the app icon. All work profile apps are sandboxed and distinct from the personal profile versions of the same apps. No data is shared between the two copies, so there is no chance of accidentally merging data across them.

Freezing work profile apps

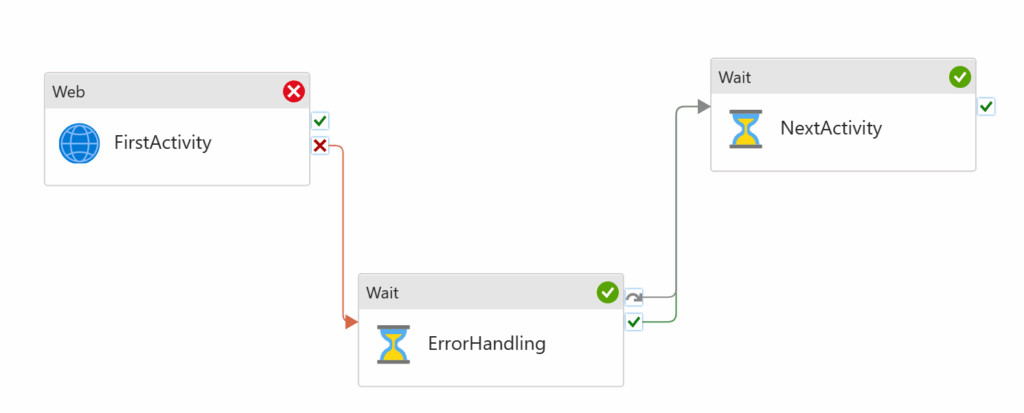

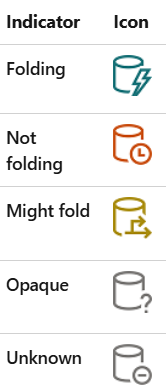

One really great feature of Shelter, or rather of the work profile, is the ability to freeze work apps. Freezing an app completely stops it from functioning. It will not send notifications, download in the background using your data, or use battery life. It is also completely inaccessible. Unfreezing is virtually instant.

Freezing can be done manually, or you can set a schedule for it to happen automatically. Going on holiday? Just freeze all your work apps. Arrived and realised you forgot to put your out of office on? Unfreeze, do what you need to, then freeze and forget.

Improves Microsoft apps significantly

Where I currently work, they use Microsoft applications and the soon-to-be-replaced Intune to manage work applications. The thing is, without an Android work profile, your work and personal apps are the same installation. This is fine if you don’t use any Microsoft apps personally, but if you do, you will find yourself swapping between accounts, and when opening links you will often get error messages as the app tries to open a work link in your personal account or browser. It is just messy.

Having a work profile changes all this and makes usage basically seamless. It is a significant improvement.

TL;DR

If you are using work apps on your personal Android phone, you should give Shelter a try.